What if you could master cloud data management without needing a team of engineers? Modern businesses face this challenge daily, and Snowflake’s architecture is designed to address it. This Snowflake tutorial for beginners breaks cloud data warehousing down into short lessons, making it a good starting point for anyone new to scalable databases.

We’ll start by making Snowflake’s hybrid design easy to understand. This system handles everything from retail sales analysis to IoT sensor data. You’ll learn to create your first account, navigate its interface, and execute SQL queries that solve real business problems.

No prior cloud experience? No problem. Our hands-on examples mirror scenarios you’ll encounter in marketing, logistics, and beyond.

By the end of this guide, terms like “virtual warehouses” and “time travel” will feel intuitive. You’ll also discover why Snowflake separates storage and compute resources—a game-changer for cost-effective scaling. Ready to turn raw data into actionable insights? Let’s begin.


Key Takeaways

- Snowflake’s architecture eliminates traditional hardware limitations

- Step-by-step guidance for setting up your first cloud data warehouse

- Practical SQL exercises using retail and IoT datasets

- Cost optimization strategies through resource separation

- Real-world applications across industries like e-commerce and manufacturing

What Makes Snowflake Essential for Modern Data Warehousing?

Snowflake is widely adopted among large enterprises, including many Fortune 500 companies. Unlike legacy systems, it was built for the cloud era. Let’s look at three features that make it a strong choice for data teams today.

Cloud-Native Architecture Advantages

Snowflake’s born-in-the-cloud design breaks free from legacy systems. It is delivered as a managed service with automatic updates, runs on multiple clouds, and encrypts data by default. This means:

- Zero hardware maintenance costs

- Instant access to new features

- Built-in disaster recovery across regions

Separation of Storage and Compute

Traditional warehouses, such as Redshift clusters on older node types, tie storage and processing together. Snowflake lets the two scale independently:

Our retail clients handle 300% more Black Friday transactions without performance drops by scaling compute separately from storage.

This setup means you only pay for what you use. Need faster queries? Scale compute. Need more storage? Optimize costs separately.

Scalability for Growing Data Needs

Snowflake’s auto-scaling works like a thermostat for data. It’s different from Redshift’s manual scaling:

| Feature | Snowflake | Redshift |
|---|---|---|
| Scaling Speed | Instant | Minutes-hours |
| Cost Impact | Per-second billing (60-second minimum each time a warehouse starts) | Hourly minimums |
| Concurrent Users | Unlimited | Cluster-dependent |

This is why large companies choose Snowflake: it absorbs sudden data spikes without keeping extra resources running around the clock.

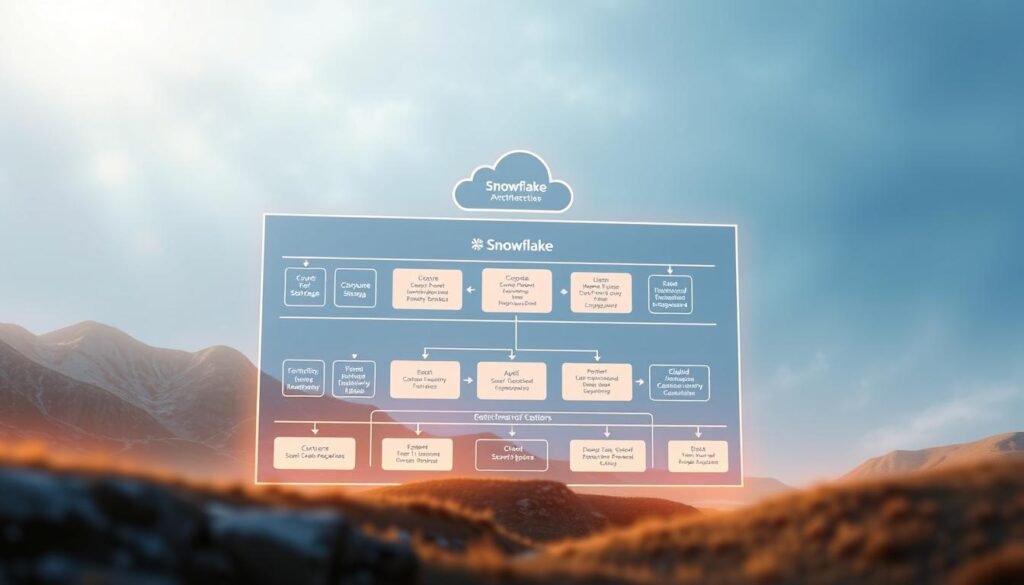

Understanding Snowflake’s Unique Architecture

Snowflake’s architecture is like a team with three layers, each doing a specific job. It’s different from traditional databases that mix storage and processing. This split design boosts performance and flexibility. Let’s explore how these layers work together to streamline your data operations.

Database Storage Layer Explained

Think of your data storage as a huge library. Snowflake’s database layer is like an efficient librarian. It uses micro-partitions, small data blocks, to organize your data. Each partition stores important details, making searches faster.

For example, when you ask for Q4 sales, Snowflake quickly ignores data from Q1-Q3. This is similar to cloud storage services like AWS S3 or Azure Blob Storage. But Snowflake does all the work for you, so you can focus on analyzing your data.
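As a minimal sketch of a query that benefits from this pruning, the example below assumes an orders table with order_date and order_total columns (illustrative names, not a built-in dataset). Filtering on a date range gives Snowflake the chance to skip micro-partitions whose stored ranges fall outside that quarter.

```sql
-- Assumes a hypothetical orders table with order_date and order_total columns.
-- The date filter lets Snowflake skip micro-partitions that hold only Q1-Q3 data.
SELECT SUM(order_total) AS q4_sales
FROM orders
WHERE order_date >= '2024-10-01'
  AND order_date <  '2025-01-01';
```

How much data is actually skipped depends on how the table was loaded. The Query Profile shows partitions scanned versus partitions total for each run.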


Query Processing Components

When you run a query, Snowflake’s virtual warehouses kick in. These warehouses are like temporary kitchens that handle each query separately. Need faster results? Just scale up the warehouse size.

This setup is special because there’s zero resource competition. A marketing team’s dashboard won’t slow down finance’s report. Queries run in their own space, ensuring consistent performance.
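The sketch below shows one way to create a warehouse and resize it later. The name reporting_wh and the chosen sizes are assumptions for illustration only.

```sql
-- Hypothetical warehouse for a reporting team; names and sizes are illustrative.
CREATE WAREHOUSE IF NOT EXISTS reporting_wh
  WAREHOUSE_SIZE = 'XSMALL'
  AUTO_SUSPEND = 300          -- suspend after 300 seconds of inactivity
  AUTO_RESUME = TRUE          -- wake up automatically when a query arrives
  INITIALLY_SUSPENDED = TRUE; -- do not consume credits until first use

-- Scale up when queries need more compute; stored data is not affected.
ALTER WAREHOUSE reporting_wh SET WAREHOUSE_SIZE = 'MEDIUM';
```

Giving each team its own warehouse is what keeps one team’s workload from competing with another’s.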

Cloud Services Coordination

The cloud services layer is like Snowflake’s air traffic controller. It handles user logins, query optimization, and scaling infrastructure. This layer automatically adds more warehouses when needed, keeping costs low.

Snowflake really stands out with automatic updates and maintenance. Unlike traditional systems, Snowflake updates itself without downtime. You always get the latest features without doing anything.

Key Features Every Beginner Should Know

Snowflake makes data management easy for beginners. It helps teams work better with cloud data. This means saving time, cutting costs, and making things simpler.

Instant Elastic Scaling

Snowflake adjusts resources as needed. It handles big tasks without needing you to do anything. You can scale up or down quickly.

For example, a marketing team can analyze data without slowing down. Snowflake adds resources when needed, keeping things running smoothly.
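One way to get this kind of automatic scaling is a multi-cluster warehouse, sketched below under the assumption that the account runs Enterprise edition or higher. The name marketing_wh and the cluster counts are hypothetical.

```sql
-- Hypothetical multi-cluster warehouse (requires Enterprise edition or higher).
CREATE WAREHOUSE IF NOT EXISTS marketing_wh
  WAREHOUSE_SIZE = 'SMALL'
  MIN_CLUSTER_COUNT = 1        -- run a single cluster when demand is low
  MAX_CLUSTER_COUNT = 3        -- add up to two more clusters when queries queue
  SCALING_POLICY = 'STANDARD'  -- favor starting clusters over queuing queries
  AUTO_SUSPEND = 300
  AUTO_RESUME = TRUE;
```

Extra clusters help with many concurrent queries. A single slow query usually needs a larger warehouse size instead.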

Time Travel & Data Cloning

Ever deleted something important by mistake? Want to test new things without messing up your main data? Snowflake’s Time Travel feature helps:

- Recover deleted data for up to 90 days (Enterprise edition)

- Create zero-copy clones of entire databases for testing

- Compare current data with historical snapshots

Here’s how to clone a table, step by step:

1. Open Worksheets interface

2. Run CREATE TABLE dev_schema.order_clone CLONE prod_schema.orders;

3. Test queries on cloned data without storage duplication
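The statements below sketch the other Time Travel operations listed above, reusing the prod_schema.orders table from the clone step. The one-hour offset and the target schema name are illustrative choices.

```sql
-- Clone an entire schema instead of a single table (zero-copy).
CREATE SCHEMA dev_schema_full CLONE prod_schema;

-- Query the table as it looked one hour ago (offset is in seconds).
SELECT * FROM prod_schema.orders AT(OFFSET => -60*60) LIMIT 10;

-- Compare the current row count with the historical snapshot.
SELECT
  (SELECT COUNT(*) FROM prod_schema.orders)                       AS rows_now,
  (SELECT COUNT(*) FROM prod_schema.orders AT(OFFSET => -60*60))  AS rows_one_hour_ago;

-- Bring back a table that was dropped within its retention period.
UNDROP TABLE prod_schema.orders;
```

UNDROP only succeeds if the table was actually dropped and no table with the same name has been created since.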

Secure Data Sharing Capabilities

Snowflake makes sharing data easy and safe. You can share specific data without using VPNs or making copies:

| Traditional Method | Snowflake Sharing |
|---|---|
| VPN access to entire network | Row-level security controls |
| Static CSV exports | Live, read-only access |
| Manual access revocation | Instant permission updates |

A retail supplier can share real-time inventory with partners. No more old spreadsheets or security risks.
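A provider-side share can be sketched roughly as follows. The database, table, and account names (retail_db, inventory, partner_org.partner_account) are placeholders, and the statements assume a role that is allowed to create shares, such as ACCOUNTADMIN.

```sql
-- Hypothetical names throughout; run as a role allowed to create shares.
CREATE SHARE inventory_share;

-- Expose only the objects the partner needs.
GRANT USAGE  ON DATABASE retail_db                  TO SHARE inventory_share;
GRANT USAGE  ON SCHEMA   retail_db.public           TO SHARE inventory_share;
GRANT SELECT ON TABLE    retail_db.public.inventory TO SHARE inventory_share;

-- Make the share visible to the partner's account.
ALTER SHARE inventory_share ADD ACCOUNTS = partner_org.partner_account;

-- Revoking access later is a single statement:
-- ALTER SHARE inventory_share REMOVE ACCOUNTS = partner_org.partner_account;
```

The partner queries the shared table in place, so no copy of the data leaves the provider account.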

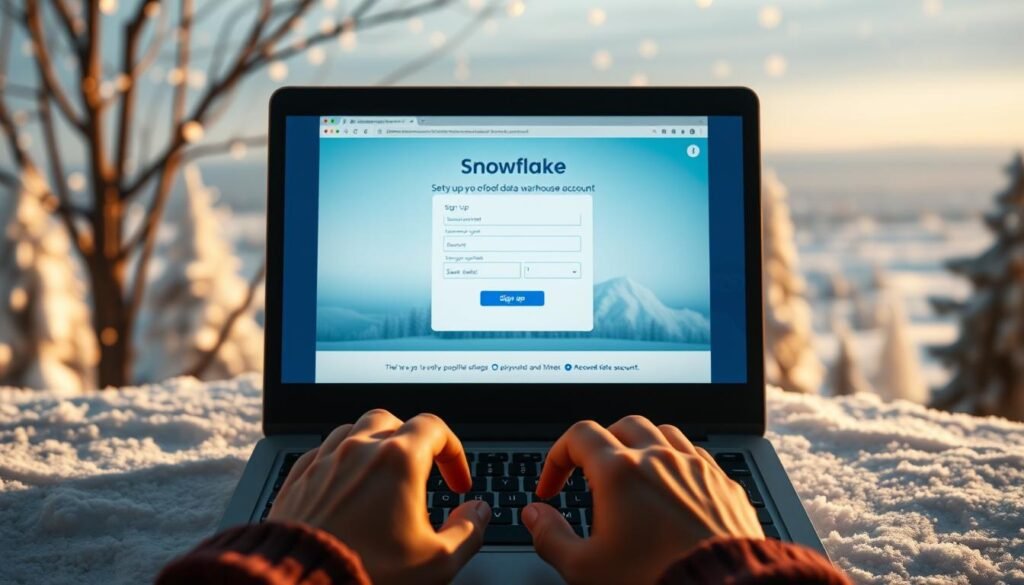

Creating Your First Snowflake Account

Setting up your Snowflake account opens the door to its powerful data tools. This guide will help you choose a platform, register, and set up security. These steps are key for a smooth start.

Choosing Your Cloud Platform

Snowflake works on AWS, Azure, or Google Cloud. Each offers the same features but varies in speed and availability:

| Platform | Best For | Provisioning Time |
|---|---|---|
| Google Cloud | Quickest setup | 2-3 minutes |
| AWS | Global enterprises | 5-7 minutes |
| Azure | Microsoft ecosystem users | 4-6 minutes |

Pro tip: New users often choose Google Cloud for its quick setup during trials.

Step-by-Step Registration Process

Here’s how to create your trial account:

- Go to snowflake.com and click “Start for Free”

- Pick your cloud provider

- Enter your work email and phone number

- Choose your Snowflake edition and region

The first user on a trial account is granted the ACCOUNTADMIN role automatically. The trial includes $400 of free usage and lasts 30 days.

Account Verification Best Practices

Secure your account right away:

- Turn on multi-factor authentication (MFA) during setup

- Verify your email and SMS

- Set up alerts for credit use

Fill out your profile within 24 hours to keep full access. Snowflake will send emails to confirm your domain.

Navigating the Snowflake Web Interface

Learning Snowflake’s web interface is key to managing data well. It’s designed to be easy to use, with tools that help you focus on your work. Here are three main areas for new users to explore.

Worksheets Overview

Worksheets are your SQL space. They offer:

- Real-time query writing and execution

- Storage for code snippets

- Access to query history and each query’s Query Profile

Tip: Press Ctrl+Enter (Windows) or Cmd+Return (Mac) to run queries fast. Your last 100 queries are saved, making it easy to compare or go back to previous work.

Database Explorer Navigation

The left panel is your guide. It lets you:

- Explore databases and schemas

- Check table structures

- Access shared data

Clicking on a table name in the left panel fills your worksheet with a SELECT * query. This makes working with big databases easier.

Role Switching Essentials

Snowflake uses roles for security. The role dropdown in the top-right corner lets you switch between roles such as:

| Role | Capabilities | Best For |
|---|---|---|
| ACCOUNTADMIN | Full system control | Administrators |
| SYSADMIN | Resource management | Team leads |
| PUBLIC | Default role granted to every user; can access only what has been granted to it | Baseline access for all users |

Remember, your access to databases and functions changes with roles. Always check your permissions before sharing your work.

Loading Data into Snowflake: Beginner Methods

Learning to load data is your first step in using Snowflake. You can work with spreadsheets or big systems. These three methods are easy to start with and grow with you.

Web Interface File Upload

Snowflake’s web loader makes uploading files quick. Here’s how to upload a CSV without coding:

- Navigate to Databases → Tables in your web interface

- Choose “Load Table” and select your CSV file

- Configure file format settings (delimiters, headers)

- Preview data before finalizing

Always test with small files first. Snowflake automatically detects common errors like mismatched columns during preview.

For JSON or Parquet files, create a named file format to keep structures. Use ON_ERROR = CONTINUE to skip bad rows.
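A named file format and a tolerant load might look like the sketch below. The format names, the sales table, and the @mystage stage are assumptions for illustration.

```sql
-- Hypothetical named formats that can be reused across loads.
CREATE OR REPLACE FILE FORMAT my_csv_format
  TYPE = 'CSV'
  FIELD_DELIMITER = ','
  SKIP_HEADER = 1
  FIELD_OPTIONALLY_ENCLOSED_BY = '"';

CREATE OR REPLACE FILE FORMAT my_json_format
  TYPE = 'JSON'
  STRIP_OUTER_ARRAY = TRUE;  -- load each array element as its own row

-- Load staged CSV files and skip rows that fail to parse.
COPY INTO sales
  FROM @mystage
  FILE_FORMAT = (FORMAT_NAME = 'my_csv_format')
  ON_ERROR = 'CONTINUE';
```

With ON_ERROR = 'CONTINUE', check the COPY output for the number of rows skipped so bad data does not go unnoticed.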

Using SnowSQL CLI Tool

Use SnowSQL for bulk loads. It’s Snowflake’s command-line tool. First, install it on Windows (MSI) or Mac (Homebrew):

- Authenticate with: snowsql -a account_name -u username

- Stage files using: PUT file://data.csv @mystage

- Load data with: COPY INTO sales FROM @mystage

Save commands in .sql files for repeat tasks. Use VALIDATION_MODE to check formats before running.
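As a sketch, the three steps could be saved in a script like the one below and run with snowsql -a account_name -u username -f load_sales.sql. The file name load_sales.sql and the CSV options are assumptions, and the sales table must already exist.

```sql
-- load_sales.sql (hypothetical script name)
CREATE STAGE IF NOT EXISTS mystage;

-- Upload the local file to the internal stage (compressed by default).
PUT file://data.csv @mystage;

-- Dry run: report parsing errors without loading any rows.
COPY INTO sales
  FROM @mystage
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1)
  VALIDATION_MODE = 'RETURN_ERRORS';

-- Real load, run once the dry run comes back clean.
COPY INTO sales
  FROM @mystage
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1);
```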

Third-Party Integration Options

For automated pipelines, check these ETL tools:

| Platform | Strengths | Best For |
|---|---|---|
| Fivetran | 300+ connectors; automatic schema updates | Salesforce/Marketo integration |
| Stitch | Cost-effective; simple transformation | MySQL/MongoDB replication |
| Matillion | Visual workflows; Snowflake-optimized | Complex transformations |

All tools support incremental loading for big data. For custom needs, use Snowflake’s connectors and drivers (for example, the Python connector or the JDBC driver for Java) or its SQL REST API.

Basic SQL Querying in Snowflake

Learn to unlock your data’s power with Snowflake’s SQL commands. This section covers three key skills: getting data, filtering it, and merging datasets. These skills are vital for analyzing sales or customer behavior.

SELECT Statement Fundamentals

The SELECT statement is key for getting data. A basic query looks like this:

SELECT column1, column2
FROM database.schema.table;

With Snowflake’s retail dataset, you might:

- Get all customer emails: SELECT email FROM customers;

- Find total sales: SELECT SUM(order_total) FROM orders;

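Pro Tip: Unquoted object names in Snowflake are case-insensitive and are stored in uppercase. Names created inside double quotes are case-sensitive and must be quoted with the exact same case every time, so check how your tables and columns were created.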

Filtering with WHERE Clause

Use the WHERE clause to narrow your results. It supports many operators:

| Operator | Purpose | Example |
|---|---|---|
| = | Exact match | WHERE state = 'CA' |
| > | Greater than | WHERE order_total > 100 |
| LIKE | Pattern matching | WHERE email LIKE '%@gmail.com' |

Use AND/OR to mix conditions for detailed filtering. Test your filters on small datasets first.
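Here is a small sketch that combines the operators from the table. It assumes the customers table has name, email, and state columns, as the earlier examples suggest.

```sql
-- Gmail users in California or New York.
-- Parentheses make the OR evaluate before the AND.
SELECT name, email, state
FROM customers
WHERE (state = 'CA' OR state = 'NY')
  AND email LIKE '%@gmail.com'
LIMIT 20;  -- keep result sets small while testing filters
```

Without the parentheses, AND binds more tightly than OR, so the query would return every California customer regardless of email.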

Joining Tables Practice

Join data from different tables with these common types:

| Join Type | Use Case | Example Scenario |
|---|---|---|
| INNER JOIN | Matching records only | Customers with orders |
| LEFT JOIN | All left table records | Customers including those without orders |
| RIGHT JOIN | All right table records | Orders with customer data (if any) |

Try joining customers and orders in the retail dataset:

SELECT c.name, o.order_date
FROM customers c
INNER JOIN orders o
ON c.id = o.customer_id;

Use table aliases (like c and o) for easier code. Practice different joins to see how they change your results.
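To see how the join type changes the result, the sketch below swaps in a LEFT JOIN on the same customers and orders tables. It counts orders per customer, so customers with no orders appear with a count of zero.

```sql
-- LEFT JOIN keeps every customer, even those with no matching orders.
SELECT c.id, c.name, COUNT(o.customer_id) AS order_count
FROM customers c
LEFT JOIN orders o
  ON c.id = o.customer_id
GROUP BY c.id, c.name
ORDER BY order_count;  -- customers without orders sort first
```

COUNT(o.customer_id) ignores the NULLs produced for unmatched customers, which is why it yields 0 rather than 1 for them.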

Data Modeling Basics in Snowflake

Data modeling is key to a strong analytics system in Snowflake. It helps with fast queries, cost savings, and easy workflows. Let’s look at the basics to organize data well and use Snowflake’s strengths.

Star Schema Design Principles

The star schema is the most popular choice in Snowflake. It has two main types of tables:

- Fact tables: Hold measurable events (like sales)

- Dimension tables: Have descriptive details (like product info)

Compared with snowflake schemas, which normalize dimensions into additional tables, star schemas:

- Simplify query patterns

- Offer faster analytics performance

- Are easier to maintain

Star schemas reduce join complexity by 40-60% in typical business intelligence scenarios.

Creating Dimension Tables

To build a Shopify-like product dimension table:

- Use INT AUTOINCREMENT for primary keys

- Add columns for details (like color and size)

- Implement SCD Type 2 for tracking changes

For SCD Type 2 in Snowflake:

- Use VALID_FROM and VALID_TO date columns

- Add an IS_CURRENT flag

- Use Snowflake’s time travel for error recovery
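A product dimension along these lines could be sketched as follows. The table name, column names, and sample values are hypothetical, and this is one common way to model SCD Type 2 rather than the only one.

```sql
-- Hypothetical product dimension with SCD Type 2 tracking columns.
CREATE TABLE IF NOT EXISTS dim_product (
  product_key    INT AUTOINCREMENT PRIMARY KEY, -- surrogate key
  product_id     VARCHAR NOT NULL,              -- business key from the source system
  product_name   VARCHAR,
  product_color  VARCHAR,
  product_size   VARCHAR,
  valid_from     DATE NOT NULL,
  valid_to       DATE,                          -- NULL while the row is current
  is_current     BOOLEAN DEFAULT TRUE
);

-- When an attribute changes: close the current row...
UPDATE dim_product
SET valid_to = CURRENT_DATE(), is_current = FALSE
WHERE product_id = 'SKU-123' AND is_current;

-- ...then insert the new version as the current row.
INSERT INTO dim_product (product_id, product_name, product_color, product_size, valid_from)
VALUES ('SKU-123', 'Trail Jacket', 'Green', 'M', CURRENT_DATE());
```

Snowflake records the PRIMARY KEY constraint as metadata but does not enforce it, so uniqueness still depends on the loading process.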

Building Fact Tables

Your sales fact table should have:

- Foreign keys linking to dimensions

- Quantitative measures (like quantity sold)

- Transaction timestamps

Follow these best practices:

- Use DATE or TIMESTAMP for time references

- Store amounts in consistent currencies

- Set granularity at the atomic transaction level

| Schema Type | Query Speed | Storage Efficiency |
|---|---|---|
| Star | Faster | Moderate |
| Snowflake | Slower | Higher |
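A sales fact table that follows the guidelines above might look like this sketch. All table and column names are assumptions, and the key columns are meant to join to dimension tables you define yourself.

```sql
-- Hypothetical sales fact table at one row per transaction line.
CREATE TABLE IF NOT EXISTS fact_sales (
  sale_id        INT AUTOINCREMENT,
  product_key    INT NOT NULL,            -- joins to a product dimension
  store_key      INT NOT NULL,            -- joins to a store dimension
  sold_at        TIMESTAMP_NTZ NOT NULL,  -- transaction timestamp
  quantity_sold  INT NOT NULL,
  amount_usd     NUMBER(12,2) NOT NULL    -- single, consistent currency
);

-- Example rollup: monthly revenue and units.
SELECT DATE_TRUNC('month', sold_at) AS sales_month,
       SUM(amount_usd)    AS revenue_usd,
       SUM(quantity_sold) AS units
FROM fact_sales
GROUP BY sales_month
ORDER BY sales_month;
```

Keeping the grain at the atomic transaction level means any coarser summary can be derived later with GROUP BY.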

Security Best Practices for Beginners

Keeping your Snowflake data warehouse safe is key. We’ll look at three main security steps. These steps offer strong protection without slowing down work.

Role-Based Access Control

Snowflake’s RBAC system helps manage user access. It uses a role hierarchy for setting up precise user permissions. Here are the basics to get started:

| Role Level | Permissions | Example Users |
|---|---|---|
| ACCOUNTADMIN | Full system control | IT Directors |
| SYSADMIN | Warehouse management | Database Admins |
| Custom role (for example, ANALYST) | Data querying only | Analysts |

Remember the least-privilege principle. Give users only the access they need. Review role grants regularly with SHOW GRANTS or the GRANTS_TO_ROLES and GRANTS_TO_USERS views in the ACCOUNT_USAGE schema.
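A read-only analyst role could be set up roughly as shown below. The role, warehouse, database, and user names are placeholders, and the statements assume you can use SECURITYADMIN.

```sql
-- Hypothetical names: analyst, reporting_wh, retail_db, jane_doe.
USE ROLE SECURITYADMIN;

CREATE ROLE IF NOT EXISTS analyst;
GRANT ROLE analyst TO ROLE SYSADMIN;  -- keep custom roles under SYSADMIN

-- Grant only what is needed to run read-only queries.
GRANT USAGE  ON WAREHOUSE reporting_wh TO ROLE analyst;
GRANT USAGE  ON DATABASE  retail_db    TO ROLE analyst;
GRANT USAGE  ON SCHEMA    retail_db.public TO ROLE analyst;
GRANT SELECT ON ALL TABLES IN SCHEMA retail_db.public TO ROLE analyst;

-- Assign the role to a user.
GRANT ROLE analyst TO USER jane_doe;
```

The SELECT grant covers tables that exist today. Tables created later need their own grant or a GRANT ... ON FUTURE TABLES statement.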

Network Policy Configuration

To limit data access, follow these steps:

- Navigate to Admin » Security » Network Policies

- Create policy with allowed IP ranges

- Set block/allow rules

- Apply to specific users or account-wide

For HIPAA, add multi-factor authentication. GDPR teams should log login attempts.
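The same steps can be expressed in SQL, as in this sketch. The policy name, user name, and IP ranges (taken from the documentation-only 203.0.113.0/24 block) are placeholders.

```sql
-- Hypothetical policy: allow one office range, block one address inside it.
CREATE NETWORK POLICY office_only
  ALLOWED_IP_LIST = ('203.0.113.0/24')
  BLOCKED_IP_LIST = ('203.0.113.99');

-- Apply to a single user first to confirm that it behaves as expected.
ALTER USER jane_doe SET NETWORK_POLICY = office_only;

-- Apply account-wide only after testing, to avoid locking yourself out:
-- ALTER ACCOUNT SET NETWORK_POLICY = office_only;
```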

Data Encryption Methods

Snowflake uses:

- AES-256 for storage

- TLS 1.2 for transmissions

| Standard | Encryption Requirement | Snowflake Compliance |
|---|---|---|
| HIPAA | PHI encryption at rest | Fully supported |
| GDPR | Pseudonymization options | Available via masking policies |
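As an illustration of the masking policies mentioned in the table, the sketch below hides email addresses from every role except one. The policy name, the PII_READER role, and the customers.email column are assumptions, and masking policies require Enterprise edition or higher.

```sql
-- Hypothetical policy: only the PII_READER role sees real email addresses.
CREATE MASKING POLICY email_mask AS (val STRING) RETURNS STRING ->
  CASE
    WHEN CURRENT_ROLE() IN ('PII_READER') THEN val
    ELSE '***MASKED***'
  END;

-- Attach the policy to a column.
ALTER TABLE customers MODIFY COLUMN email SET MASKING POLICY email_mask;
```

The masking is applied at query time, so the stored data is unchanged and the same table can serve both privileged and unprivileged roles.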

For extra protection, use customer-managed keys with AWS KMS or Azure Key Vault. Change keys every three months for best security.

Performance Optimization Techniques

Improving Snowflake’s performance is easy, even for beginners. Focus on three main areas to see big improvements. Balance warehouse resources, analyze query patterns, and use built-in caching to boost efficiency and save money.

Choosing the Right Warehouse Size

Snowflake offers virtual warehouses in sizes from X-Small to 6X-Large, with each step up roughly doubling the credits consumed per hour. Start small and grow only when needed, using data volume and query complexity as your guide. Small datasets might fit in X-Small, while larger ones need Medium or larger.

Remember:

- Oversized warehouses waste credits

- Undersized ones cause slow queries

- Use auto-suspend to stop idle warehouses

Mastering Query Profiling

The Query Profile tool shows where SQL execution slows down. Look for:

- Red warning icons in long-running JOIN operations

- Data skew indicators in table scans

- Spilling operations that exceed memory limits

For example, a slow JOIN might show “Cartesian Product” warnings. Fix it by adding proper join conditions or filtering early.
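To find candidates worth opening in Query Profile, a query like the sketch below lists recent statements that spilled to disk. It assumes your role can read the SNOWFLAKE.ACCOUNT_USAGE schema, where data can lag behind real time.

```sql
-- Slowest queries from the last 7 days that spilled beyond memory.
SELECT query_id,
       warehouse_name,
       total_elapsed_time / 1000 AS elapsed_seconds,  -- stored in milliseconds
       bytes_spilled_to_local_storage,
       bytes_spilled_to_remote_storage
FROM snowflake.account_usage.query_history
WHERE start_time >= DATEADD('day', -7, CURRENT_TIMESTAMP())
  AND (bytes_spilled_to_local_storage > 0
       OR bytes_spilled_to_remote_storage > 0)
ORDER BY total_elapsed_time DESC
LIMIT 20;
```

Remote spilling is usually the stronger signal that a query needs a larger warehouse or an earlier filter.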

Smart Caching Strategies

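Snowflake uses three cache types:

- Metadata Cache: Stores table statistics automatically

- Result Cache: Keeps query results for 24 hours

- Warehouse Cache: Keeps recently read table data on a running warehouse’s local storage until the warehouse suspends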

Check cache hit rates in Snowsight’s History tab. Aim for 70%+ result cache utilization. Run frequent queries during peak hours to use cached results and cut costs.

Pro Tip: Combine these techniques by:

- Testing queries in an X-Small warehouse first

- Profiling during development

- Monitoring cache performance weekly

Managing Costs in Snowflake

Learning Snowflake also means learning to manage costs. Its cloud design is flexible, but you need to understand the pricing model and cost tools to get the most value without overspending. Let’s look at three main ways to control costs in Snowflake.

Understanding Credit Usage

Snowflake uses a credit system for compute resources and cloud services. There are two main ways to buy credits. The rates below are illustrative, since actual per-credit prices depend on edition, cloud provider, and region:

| Option | Pricing | Flexibility | Discounts | Best For |
|---|---|---|---|---|
| On-Demand | $4/credit | Pay-as-you-go | None | Variable workloads |
| Pre-Purchased | $3.50/credit (annual commitment) | Fixed capacity | Up to 12.5% | Predictable usage, long-term projects |

Start with On-Demand credits during initial exploration phases to maintain budget flexibility.

Auto-Suspend Configuration

Stop idle warehouses from using up credits with auto-suspend. For development environments:

- Navigate to Warehouses in the web interface

- Set Auto Suspend to 10 minutes

- Enable Auto Resume for future sessions

This setup cuts costs by 40-60% for workloads that aren’t always on. It also makes starting up again quick when needed.
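The same settings can be applied in SQL, as sketched here for a hypothetical warehouse named dev_wh. AUTO_SUSPEND is given in seconds, so 600 corresponds to the 10 minutes suggested above.

```sql
-- Suspend after 10 idle minutes and resume on the next query.
ALTER WAREHOUSE IF EXISTS dev_wh SET
  AUTO_SUSPEND = 600
  AUTO_RESUME = TRUE;

-- Confirm the settings (see the auto_suspend and auto_resume columns).
SHOW WAREHOUSES LIKE 'dev_wh';
```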

Resource Monitoring Alerts

Stay on top of spending with Snowflake’s alert system:

- Create a Resource Monitor from the Account tab

- Set monthly credit thresholds based on historical usage

- Configure notifications (email by default) at 75%, 90%, and 100% levels

For sudden spikes, turn on 7-day anomaly detection. It catches unusual usage early.
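A resource monitor with these thresholds could be sketched as follows. The monitor name, the 100-credit quota, and the dev_wh warehouse are assumptions, and creating monitors requires the ACCOUNTADMIN role.

```sql
USE ROLE ACCOUNTADMIN;

-- Hypothetical monthly budget of 100 credits.
CREATE OR REPLACE RESOURCE MONITOR monthly_budget
  WITH CREDIT_QUOTA = 100
       FREQUENCY = MONTHLY
       START_TIMESTAMP = IMMEDIATELY
       TRIGGERS ON 75  PERCENT DO NOTIFY
                ON 90  PERCENT DO NOTIFY
                ON 100 PERCENT DO SUSPEND;  -- let running queries finish, then suspend

-- Attach the monitor to a warehouse.
ALTER WAREHOUSE dev_wh SET RESOURCE_MONITOR = monthly_budget;
```

Using SUSPEND rather than NOTIFY at 100% turns the last threshold into a hard cap instead of a warning.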

By using these methods, beginners can learn Snowflake’s main features while keeping costs steady. Regular checks of Account Usage data help improve your approach as you get better.

Integrating BI Tools with Snowflake

Connecting business intelligence platforms to Snowflake unlocks powerful data visualization capabilities. This section guides you through integrations with popular tools like Tableau, Power BI, and Looker. Proper configuration gives you up-to-date insights while keeping query performance and cost under control.

Tableau Connection Setup

Start by installing Tableau Desktop (a free trial is available; Tableau Public does not offer database connectors such as Snowflake) and follow these steps:

- Select Snowflake from the connector list

- Enter your server name (format: orgname-accountname.snowflakecomputing.com)

- Choose authentication method (SSO or username/password)

Performance Tip: Use Extract mode for static reports and a Live connection for real-time dashboards. Compare approaches:

| Method | Refresh Frequency | Warehouse Usage |
|---|---|---|
| Live connection | Real-time | Continuous |
| Extract | Scheduled | Periodic |
| Hybrid | Custom | Balanced |

Power BI Integration Steps

Microsoft’s BI tool connects via ODBC driver:

- Install Snowflake ODBC driver from official site

- In Power BI Desktop, select Snowflake under Database sources

- Input server URL and credentials

Optimize large datasets by relying on query folding, which pushes transformations down to Snowflake’s compute layer.

Looker Configuration Guide

Looker’s modern interface pairs well with Snowflake’s architecture:

- Create new connection in Looker’s Admin panel

- Select JDBC driver and input connection parameters

- Test latency using sample queries

Advanced users can train and run Snowpark ML models inside Snowflake and write the results to tables or views, which Looker can then query like any other data.

Real-World Use Case Examples

Snowflake’s architecture is a game-changer in many industries. Let’s look at three examples where it makes a big difference.

Retail Analytics Implementation

A big retailer used Snowflake to check 2.5 billion inventory records from 4,700 stores. They got data from POS systems in real time. Here’s what they did:

- They stored different data types in Snowflake’s VARIANT columns.

- They scaled up during busy holiday times.

- They made secure views for checking vendor performance.

This helped them cut down stockouts by 37% using past data trends.

IoT Data Processing Scenario

An auto company built a pipeline for sensor data from 14 million events per minute from cars. Snowflake worked with AWS IoT Core to:

- Make copies for testing without affecting the main data.

- Use Time Travel to compare maintenance patterns by season.

- Keep an eye on costs with resource monitors.

Now, they can predict engine failures 48 hours before they happen.

Financial Reporting System

A top company used Snowflake to meet SEC rules. They combined:

- Daily snapshots of financial reports.

- Access control for audit trails.

- Sharing data with auditors.

This cut down month-end closing time from 14 days to 72 hours. They stayed fully compliant with audits.

Common Beginner Mistakes to Avoid

Even experienced developers can make mistakes when first using Snowflake. Let’s look at three big errors beginners make and how to avoid each one.

Oversizing Virtual Warehouses

New users often choose medium-sized warehouses for simple tasks. This wastes credits. Snowflake’s pay-as-you-go model means bigger warehouses cost 4-8x more than X-Small options for basic operations.

| Warehouse Size | Credits Per Hour | Best For |
|---|---|---|
| X-Small | 1 credit | Single queries, light workloads |
| Medium | 4 credits | Complex joins, large datasets |

Start small and scale up only when queries time out. Use these strategies:

- Monitor query duration in History tab

- Enable auto-scaling for peak loads

- Set auto-suspend under 5 minutes

Neglecting Time Travel Settings

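Long Time Travel retention can become costly for large, frequently changing tables. The default retention period is 1 day, and for every additional day Snowflake keeps the older versions of any data that was changed or deleted, which adds to storage fees.

“In the worst case, a 10TB table that is completely rewritten every day with 7-day Time Travel can hold close to 80TB of storage, so estimate your needs from how much data actually changes.”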

Adjust retention periods wisely:

- Critical tables: 7-14 days

- Staging tables: 1 day

- Archived data: 0 days
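Retention is set per table, as in this sketch. The table names are hypothetical, and reading TABLE_STORAGE_METRICS assumes access to the SNOWFLAKE.ACCOUNT_USAGE schema.

```sql
-- Critical table: keep a week of history (Enterprise edition or higher).
ALTER TABLE orders SET DATA_RETENTION_TIME_IN_DAYS = 7;

-- Staging table: the default single day is enough.
ALTER TABLE stg_orders SET DATA_RETENTION_TIME_IN_DAYS = 1;

-- See how much storage Time Travel and Fail-safe actually consume.
SELECT table_name, active_bytes, time_travel_bytes, failsafe_bytes
FROM snowflake.account_usage.table_storage_metrics
WHERE table_name IN ('ORDERS', 'STG_ORDERS');
```

Comparing time_travel_bytes with active_bytes shows whether a longer retention period is costing more than the recovery window is worth.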

Improper Role Hierarchy Setup

Granting SYSADMIN access to analysts often leads to security gaps. Follow this RBAC checklist:

- Create custom roles for departments

- Assign privileges through inherited roles

- Use USERADMIN for user and role management

Remember: least privilege access prevents accidental data deletion. Audit permissions monthly using SHOW GRANTS command.
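A monthly audit can start from a few SHOW GRANTS statements like the ones sketched below. The role name analyst and user name jane_doe are placeholders.

```sql
-- What can this role do?
SHOW GRANTS TO ROLE analyst;

-- Which users and roles have been given this role?
SHOW GRANTS OF ROLE analyst;

-- Which roles does a specific user hold?
SHOW GRANTS TO USER jane_doe;
```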

Conclusion

This snowflake tutorial for beginners has given you the basics for managing cloud data. You’ve learned about architecture, SQL queries, and how to save costs. You’re now ready to handle real tasks like analyzing retail data or IoT pipelines.

Consider pursuing Snowflake’s SnowPro Core Certification. The test covers database skills, data movement, and improving performance. Use what you’ve learned to make your data environment better before you go live.

Before starting your first project, check a few things. Make sure you have the right security settings and cost controls. Also, make sure your data tools fit your company’s needs.

Keep Snowflake’s documentation handy for tips on managing data. Join the Snowflake community forums to talk about any problems you face. What project will you start first? Share your journey and any questions you have.

FAQ

How does Snowflake’s architecture differ from traditional data warehouses like Redshift?

Snowflake has a unique three-layer architecture. It separates storage, compute, and cloud services. Unlike Redshift, Snowflake scales compute resources independently from storage. This allows it to handle big spikes in traffic without permanent over-provisioning, using pay-as-you-go pricing.

What’s the easiest way to load CSV data as a Snowflake beginner?

For a one-off load, use the web interface’s file upload. For regular loads, SnowSQL CLI with PUT/COPY commands is best. Always define file formats explicitly. For CSV, use FIELD_OPTIONALLY_ENCLOSED_BY = '"' to avoid parsing errors.

How does Time Travel help recover accidentally deleted data?

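Snowflake’s Time Travel keeps data for 1-90 days (configurable; more than 1 day requires Enterprise edition or higher). To find deleted records, query the table with AT(OFFSET => -3600) to see data from an hour ago. For full restoration, use CREATE TABLE restored_table CLONE original_table BEFORE(TIMESTAMP => '2024-01-01 00:00:00'::TIMESTAMP_LTZ). If the whole table was dropped, UNDROP TABLE brings it back within the retention period.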

Which cloud platform should I choose for my first Snowflake account?

Google Cloud is best for beginners due to fast provisioning. AWS offers deep integration with services like S3. Azure is great for Active Directory integration. Start with your organization’s cloud provider – Snowflake’s interface is the same across platforms.

Why do my SQL JOIN queries perform poorly in Snowflake?

Common causes include poorly pruned scans on very large tables and undersized warehouses. Use Query Profile in Snowsight to analyze plans. For very large tables, consider a clustering key on the columns you filter or join on most, such as customer_id in retail analytics. Consider upgrading to a Medium warehouse for complex joins.

How can I prevent overspending on virtual warehouses?

Set auto-suspend to 1-5 minutes for dev environments. As a rough starting point, try X-Small for small tables (around 1M rows) and Medium for 100M+ rows, then adjust based on observed query times. Enable resource monitors with alerts at 75% credit quota. For BI tools like Tableau, prefer Auto-Suspend over Always-On for overnight periods.

What’s the best security practice for beginner Snowflake users?

Implement Role-Based Access Control (RBAC) right away. Start with SYSADMIN for provisioning and SECURITYADMIN for user management. Always enable Multi-Factor Authentication (MFA) during account verification. For IP whitelisting, create network policies restricting access to office IP ranges using CREATE NETWORK POLICY.

How do I implement a star schema for retail analytics?

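Create dimension tables for products, stores, and dates with surrogate keys. Build fact tables for sales transactions with CREATE TABLE (use CREATE TRANSIENT TABLE only for data you can reload, since transient tables have no Fail-safe). Use ALTER TABLE to add foreign key constraints, keeping in mind that Snowflake records them as metadata but does not enforce them. For Shopify-like data, implement Slowly Changing Dimension (SCD) Type 2 using valid_from/valid_to timestamps.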

Can I connect Power BI to Snowflake without coding?

Yes. Power BI Desktop includes a native Snowflake connector (older versions also need Snowflake’s ODBC driver). For DirectQuery mode, optimize with pre-aggregated Snowflake views. Cache frequent dimensions using CREATE MATERIALIZED VIEW. Always test both DirectQuery and Import modes, since large datasets often perform better when imported.

What’s the biggest mistake beginners make with Snowflake?

Oversizing virtual warehouses is a common mistake. Start small and scale up. Many users provision Medium warehouses for simple queries that X-Small could handle. Monitor query duration and queuing in Query History to right-size. Another common error is forgetting Time Travel storage costs, so set DATA_RETENTION_TIME_IN_DAYS appropriately per table.